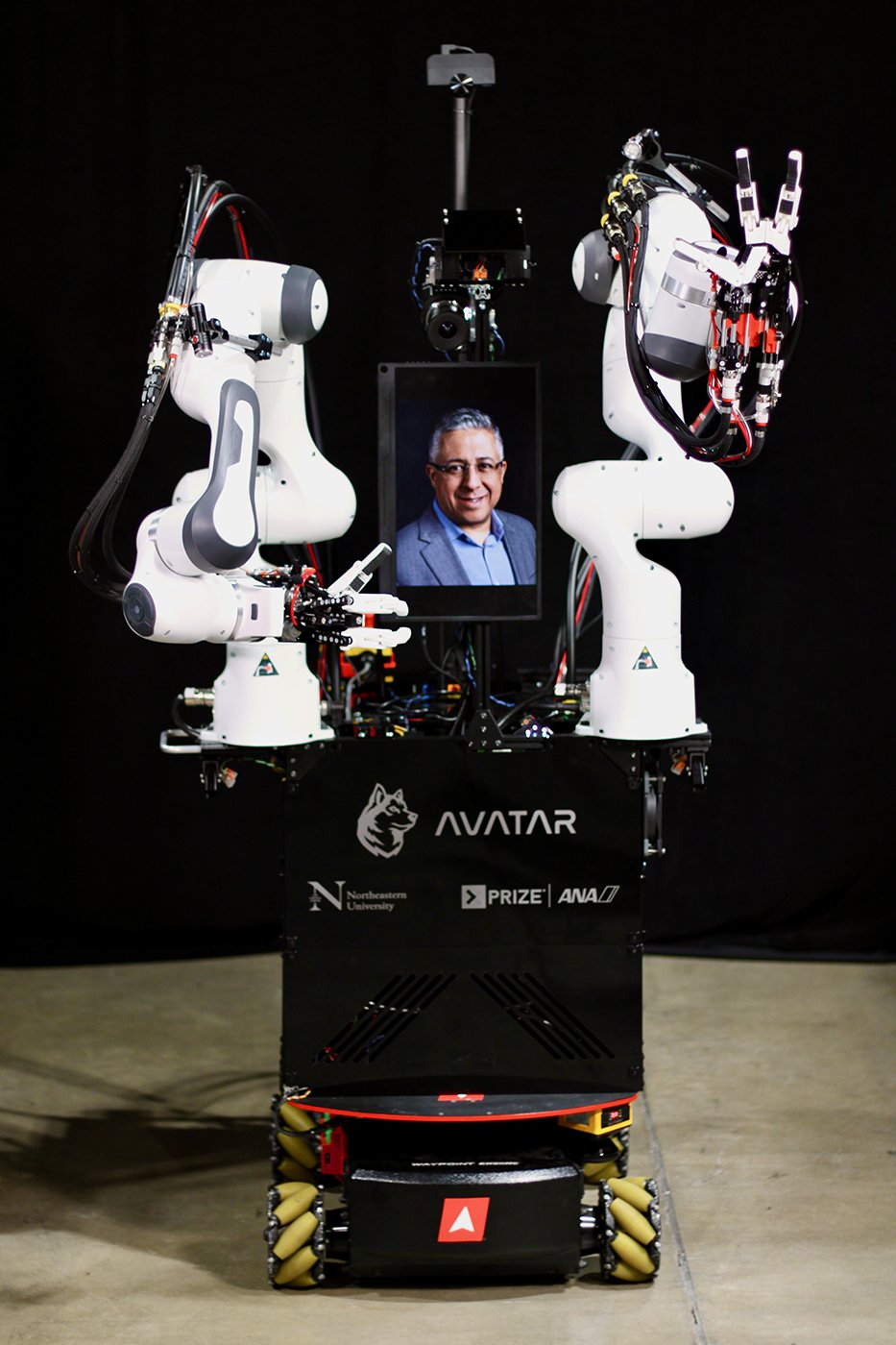

XPRIZE AVATAR CHALLENGE

Team Northeastern placed third in finals testing. We developed a wireless robotic avatar based on the same concept we used for semifinals testing. Competition tasks included using a drill, social interaction, navigation, picking up, evaluating, and placing objects, and using haptics to select a rough rock.

I guided development of the user interface. I created the concepts for the user interfaces of both systems, including the physical interfaces, driving interfaces, and display configurations. Balancing ease of use, ergonomics, and performance capability was a challenge.

We placed third in the semifinals as well, performing the tasks much faster than most teams with our intuitive, simple, yet capable system. Our team of six PhD students worked very hard to pull the system together in the weeks leading up to the competition. We had no idea what the competitors would be like, which made design decisions difficult.

We repurposed the gripper I had developed for use in the competition as a haptic teleoperation system. A second gripper was modified to be a user interface. I designed the input arm, which used encoders to control the UR5, as well as the counterbalance system. Integrating all of the systems into one, and fitting all the motors and power electronics together within the requirements of the competition, was a challenge.

The crew working the event was excited to try our system, and stayed late after the competition to demo it.

I used my years of coaching experience to inform user interface design decisions and to train the system operators, who had to perform tasks using the system after just one hour of training.